Wanting to integrate our Grünbeck softliQ:SD18 water softener into openHAB, I tried the existing community binding, unfortunately without success. So I went the MQTT route with the help of ioBroker. The path the data takes from our water softener to openHAB is not as straightforward as a direct binding, but it works just fine.

The data arrives in openHAB through the following workflow:

Water-softener => Grünbeck cloud => ioBroker => MQTT broker => openHAB

This might sound complicated, but in fact it is not. It only requires a few components to be in place.

Requirements

To integrate data from your water softener into openHAB, you need to have the following components set up:

- a Grünbeck water softener connected to the Grünbeck cloud

- an ioBroker instance (installation instructions)

- an MQTT broker (installation instructions)

- an openHAB instance (installation instructions)

1. Connect ioBroker to the Grünbeck cloud

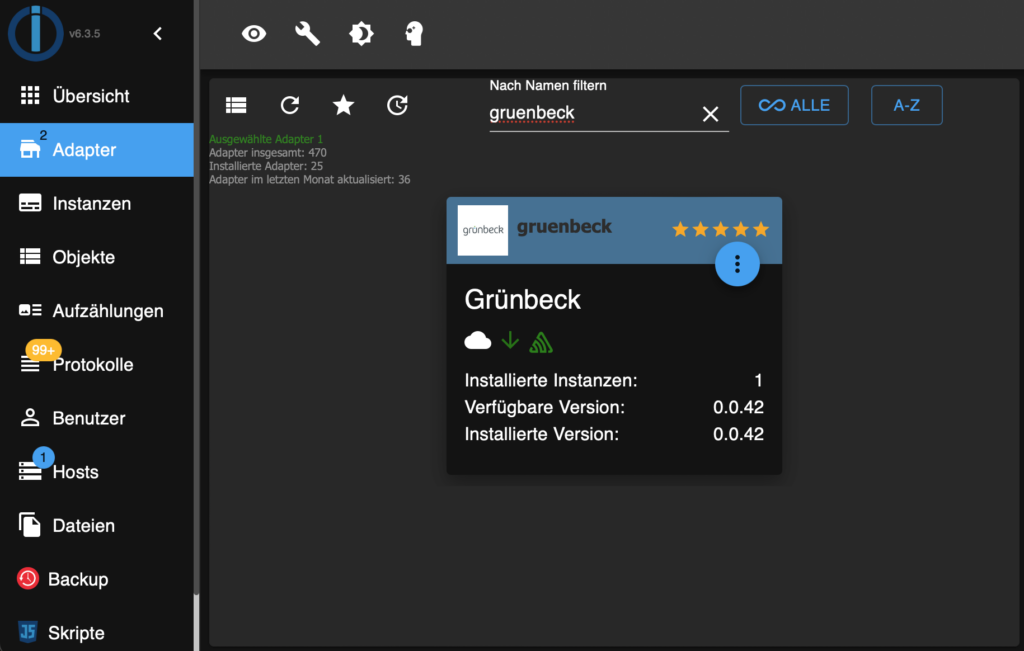

To receive data from your Grünbeck cloud in ioBroker, you have to install the Grünbeck adapter. In ioBroker, click on Adapter and search for Grünbeck. Click the three vertical dots and then the “+” sign.

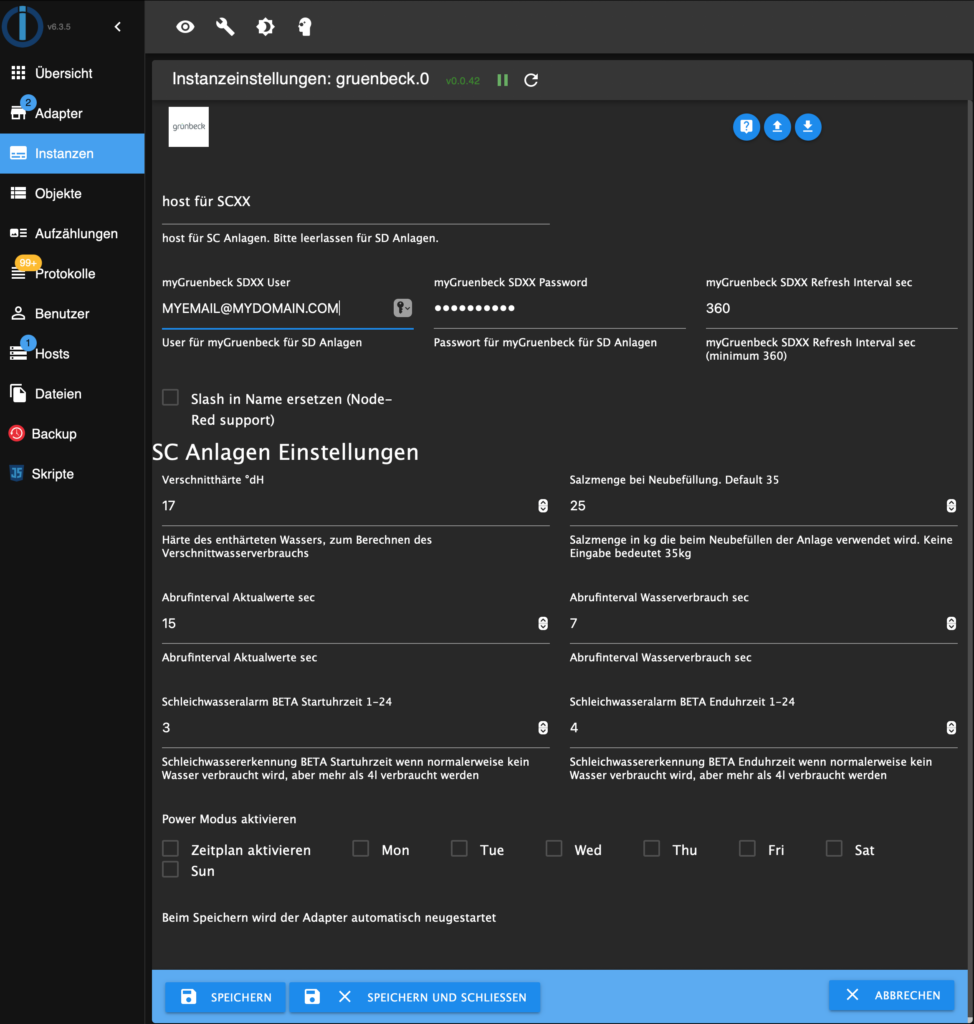

Now go to the Instances tab and enter your connection data for the Grünbeck cloud. Your Grünbeck cloud username and password are sufficient if you use an SD device.

2. Connect ioBroker to your MQTT broker

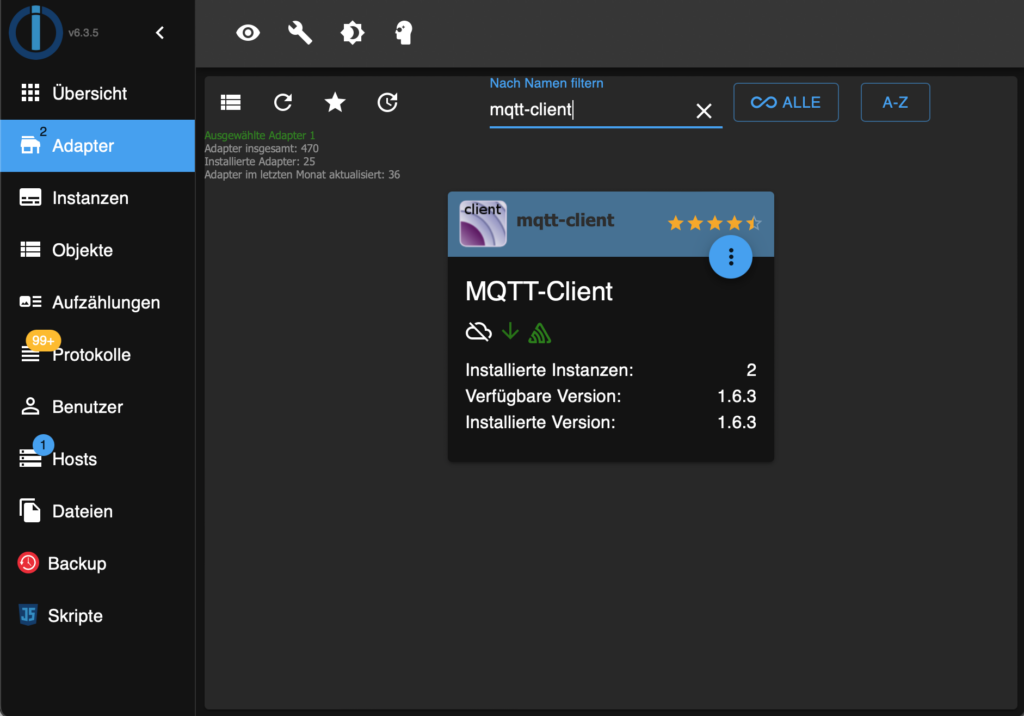

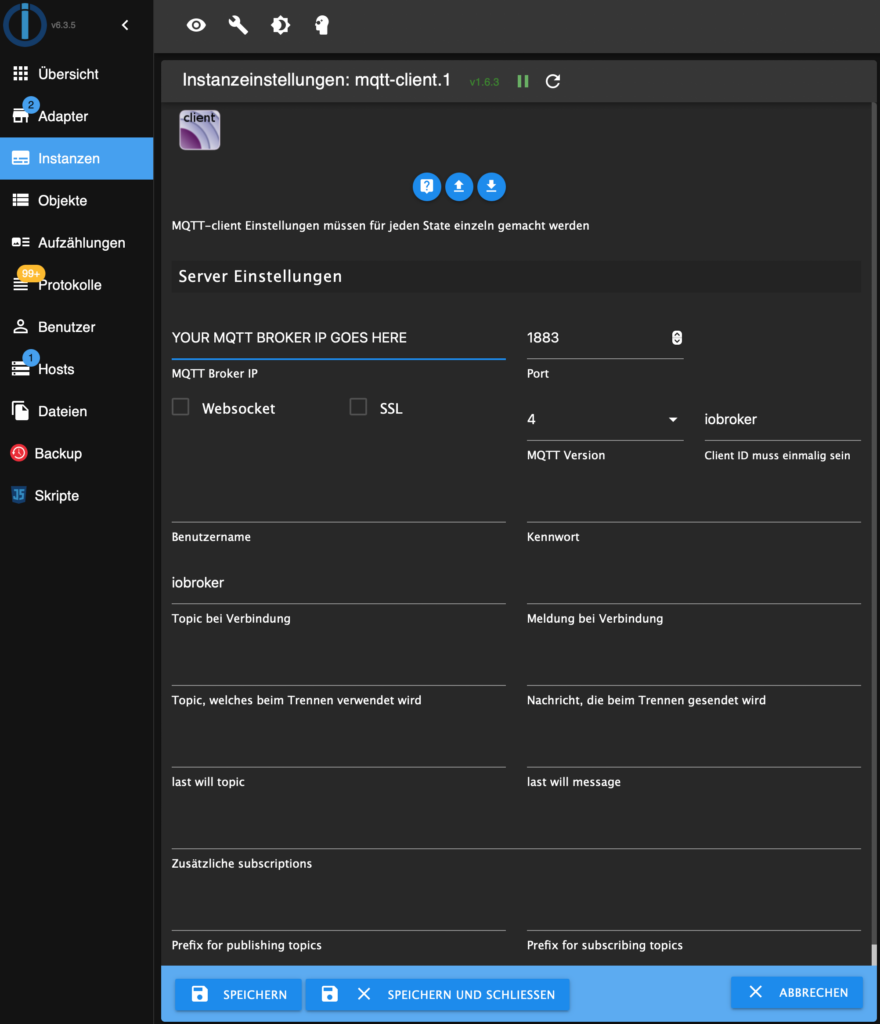

Install the MQTT-client adapter in ioBroker in the same way you did for the Grünbeck cloud adapter.

Configure the access data for your MQTT broker in the newly installed MQTT-client instance. The broker's IP address and port are sufficient if you do not use authentication.
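If the MQTT-client instance does not connect, a quick check from another machine can tell you whether the broker is reachable at all. The following is a minimal sketch using only the Python standard library. The function name `broker_reachable` is made up for this example, and 1883 is the usual default port for unencrypted MQTT; your broker may listen elsewhere. It only tests that the TCP port is open, not that the broker accepts your credentials.

```python
import socket


def broker_reachable(host: str, port: int = 1883, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only checks that something is listening on the port. It does not
    speak MQTT and does not test authentication.
    """
    try:
        # create_connection resolves the host and opens a TCP connection;
        # the "with" block closes the socket again right away.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution errors.
        return False
```

Calling `broker_reachable("192.168.1.10")` (a placeholder address, replace it with your broker's IP) should return `True` if the broker is up and the port is not blocked by a firewall.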

3. Send your Grünbeck data to the MQTT broker

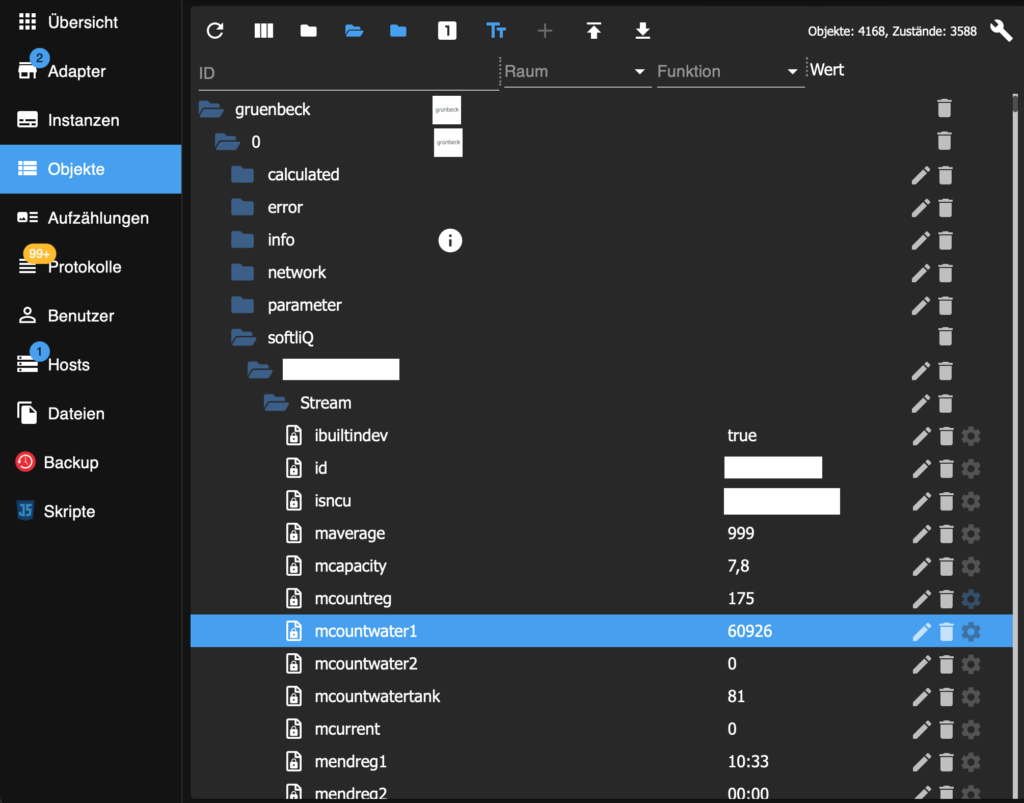

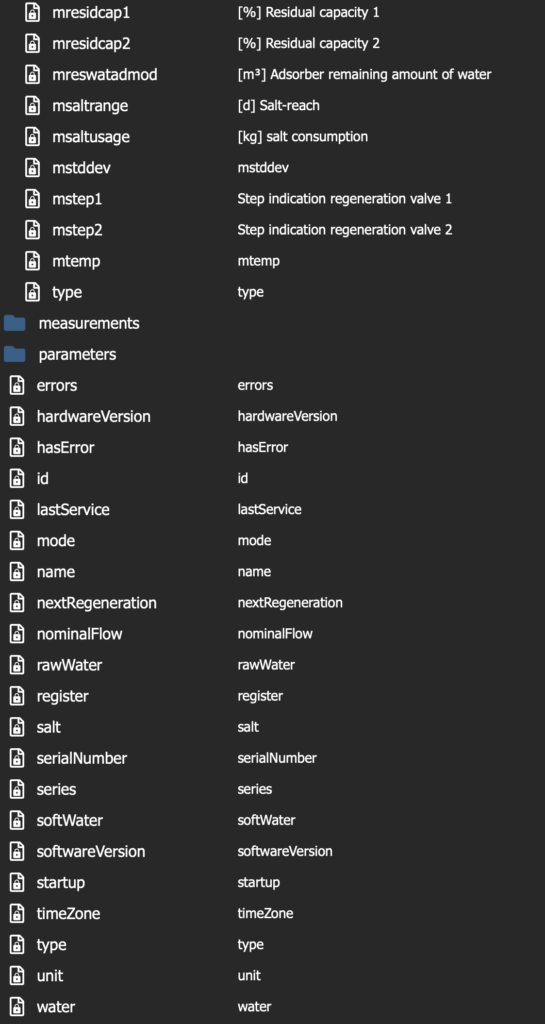

Now you have to tell ioBroker which information from the Grünbeck adapter you want it to send to your MQTT broker. If your Grünbeck adapter is correctly configured, your object list should show data points similar to these:

The first subfolder under softliQ gives you information about your device (I hid the name of the folder as it is the serial number of the device). In my installation, this folder includes three subfolders named Stream, measurements and parameters. You have to browse these folders and find out what the different objects mean.

Let’s say you want to have the water counter sent to your openHAB instance. The Stream subfolder includes an object named mcountwater1. This object is the water counter (in liters). You have to click on the gear at the end of the line listing this object (see selected line in the above screenshot).

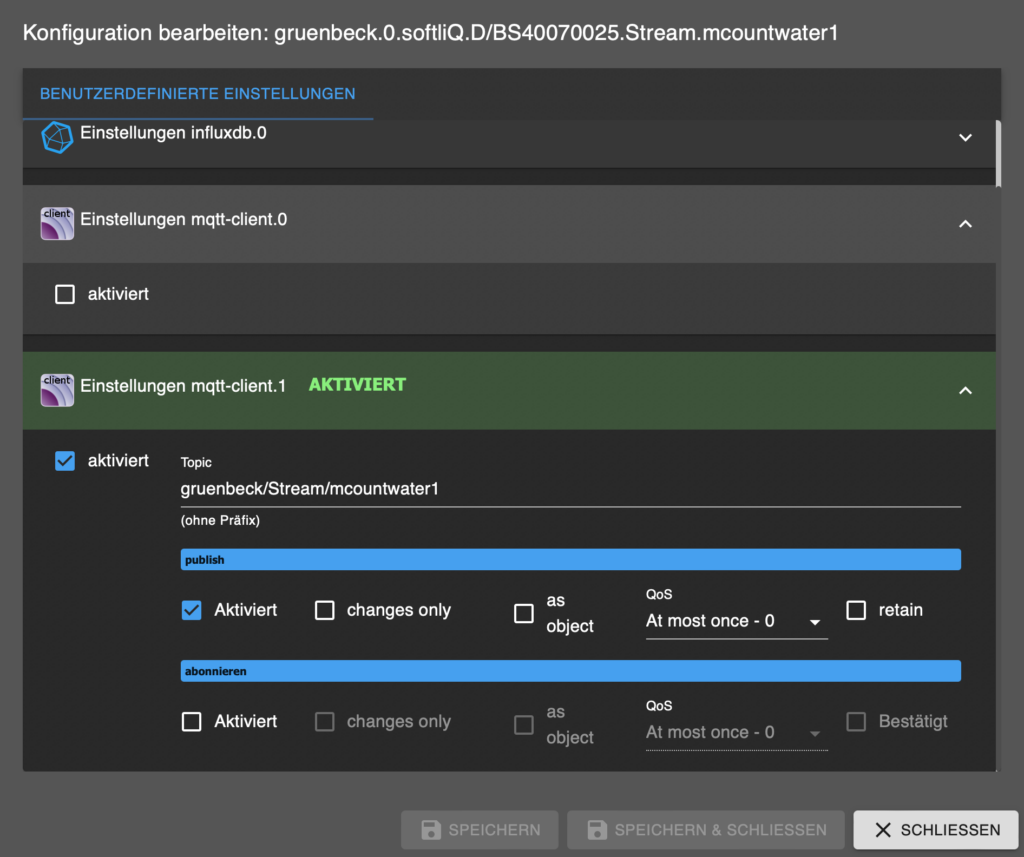

You should see a menu entry for your MQTT client (probably mqtt-client.0, if you have only one MQTT client defined). Define a Topic for this object and activate it. You are free to shape the MQTT topic tree to fit your needs. ioBroker suggests quite a long one based on the structure in the object list. I prefer to reduce the number of subtopics to make it easier to read.
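To illustrate the idea of shortening the topic, here is a small Python sketch that derives a compact topic from a dotted ioBroker object ID by keeping only the subfolder and the object name. It is not part of ioBroker. The function name `short_topic`, the `gruenbeck` prefix and the example object ID (including the `gruenbeck.0` instance name and the `SERIAL` placeholder) are assumptions for illustration; check your own object list for the real IDs. In practice you simply type the resulting topic into the Topic field by hand.

```python
def short_topic(object_id: str, prefix: str = "gruenbeck") -> str:
    """Build a short MQTT topic from a dotted ioBroker object ID.

    Keeps only the last two parts (subfolder and object name), which drops
    the adapter instance and the serial-number folder.
    """
    parts = [p for p in object_id.split(".") if p]
    if len(parts) < 2:
        raise ValueError(f"object ID too short: {object_id!r}")
    folder, name = parts[-2], parts[-1]
    # MQTT topics are case-sensitive, so the original spelling is kept.
    return "/".join([prefix, folder, name])


# Hypothetical object ID; "SERIAL" stands in for the device's serial number.
print(short_topic("gruenbeck.0.softliQ.SERIAL.Stream.mcountwater1"))
```

Whatever scheme you choose, use it consistently, because the same topic string has to be entered again later in the openHAB channel.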

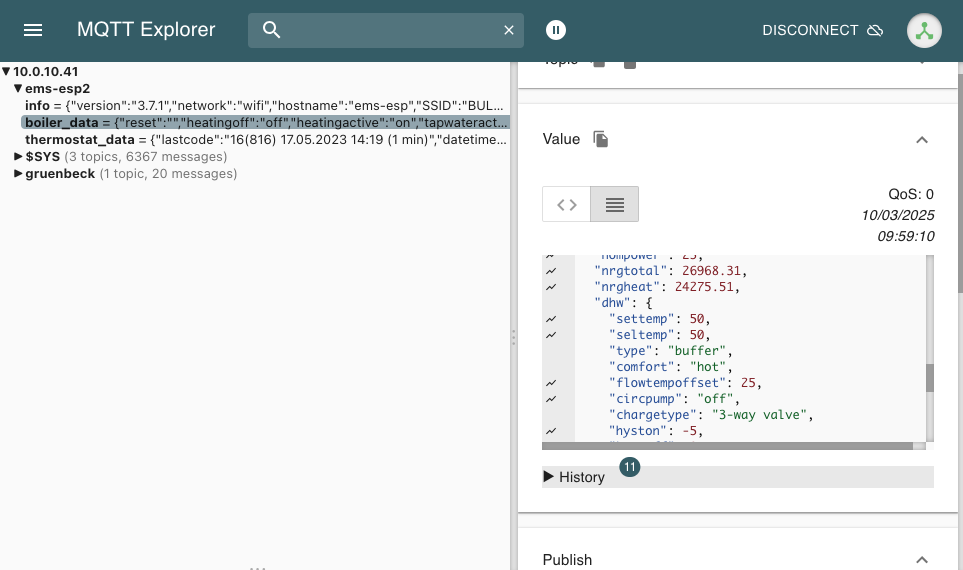

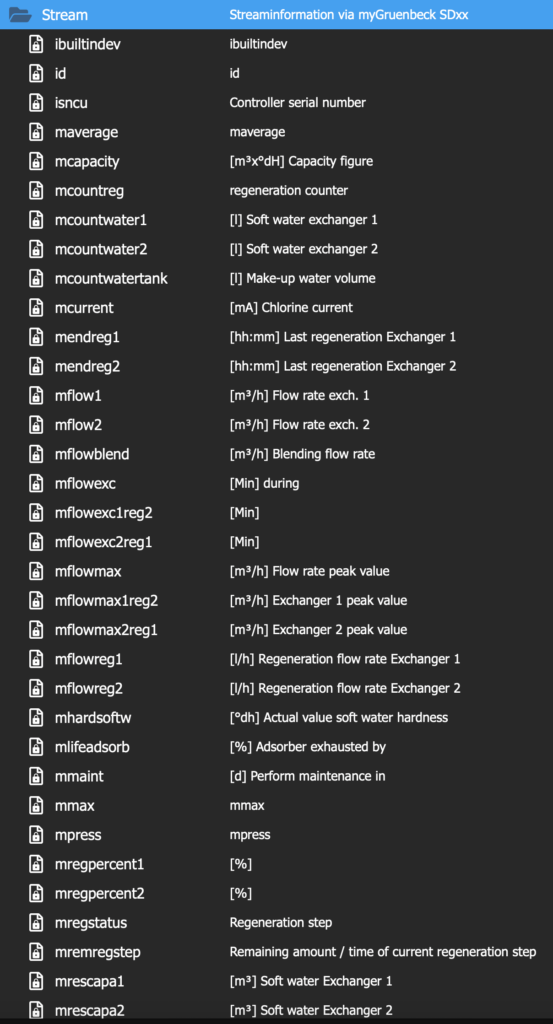

After saving the MQTT settings, your MQTT browser (I use MQTT Explorer) should show the requested data within a few seconds. The following two images show what data points are available in the Stream folder and general folder for my SD18.
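If you want to process the value outside openHAB, for example in a small script, the payload first has to be turned into a number. The sketch below assumes the payload is either a plain number or a JSON object carrying the value under a `val` key; which of the two you get depends on how your MQTT-client instance is configured, so compare with what MQTT Explorer shows. The function name `parse_counter` is made up for this example.

```python
import json


def parse_counter(payload: bytes) -> float:
    """Convert an MQTT payload into a number.

    Accepts a plain number such as b"12345" or a JSON object that carries
    the value under a "val" key (assumed layout).
    """
    # json.loads raises a ValueError subclass for non-JSON text.
    data = json.loads(payload.decode("utf-8").strip())
    if isinstance(data, dict):
        data = data["val"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(data, bool) or not isinstance(data, (int, float)):
        raise ValueError(f"not a numeric payload: {payload!r}")
    return float(data)


litres = parse_counter(b"12345")
print(f"{litres / 1000:.3f} m³")  # the counter is in liters
```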

4. Configure openHAB to import the Grünbeck data from the MQTT broker

To read MQTT messages in openHAB, you need to install the MQTT binding. Go to openHAB’s administration settings, Add-ons: Bindings and install the MQTT binding.

To start, you need to add an MQTT Broker bridge. Go to the Things tab and add a thing based on the MQTT binding. Select MQTT Broker. Give it a name (example: MQTT bridge). Enter the IP address of the MQTT broker. Save.

Now add a Generic MQTT Thing. Give it a name (example: Grünbeck water softener) and link it to the bridge created above. Save. We will use this thing to centralise all Grünbeck data from our water softener.

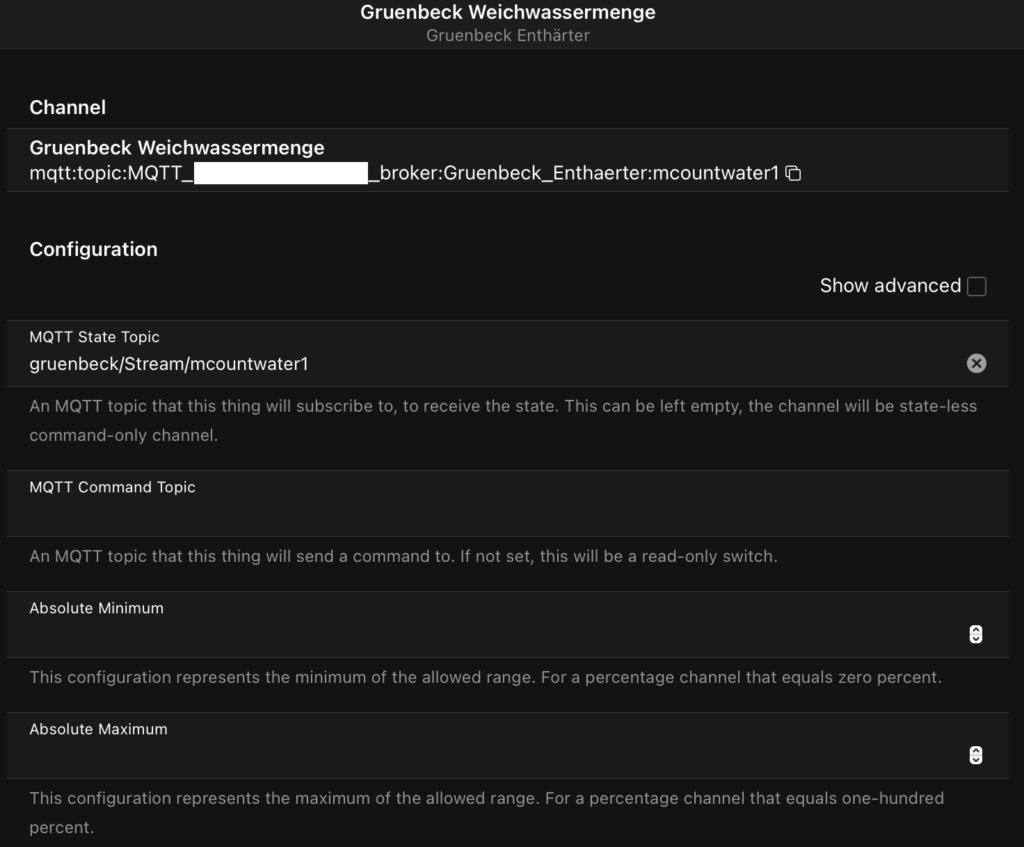

Now select the Channels tab in the thing you just created. Click on Add channel and enter, as the MQTT State Topic, the topic you defined in the MQTT settings for your Grünbeck object in ioBroker. If you like, you can add your Unit of Measurement (Show advanced). Click Done.

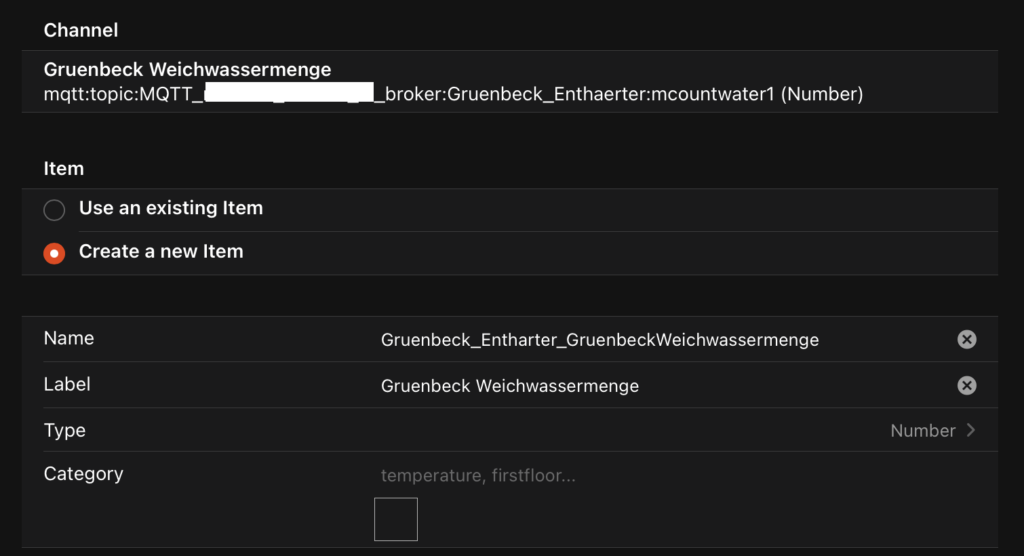

Now link this channel to an item.

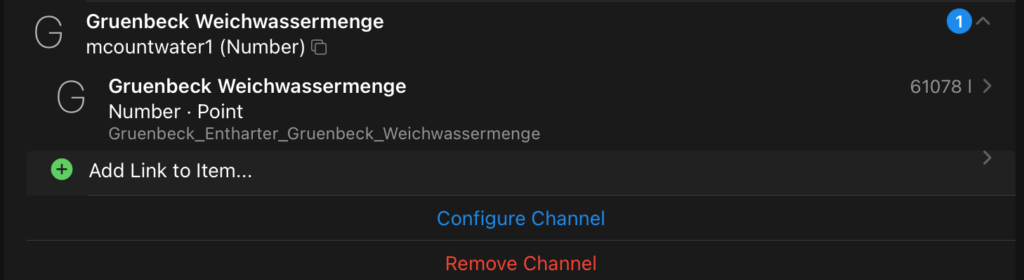

Now your item should show the amount of water treated by the water softener.

Add all the channels that you need and integrate the items into your sitemap in openHAB.